In the fast-moving fields of artificial intelligence and machine learning, neural architecture has taken center stage. It is the blueprint that shapes an artificial neural network’s capabilities, efficiency, and performance. This article explores the world of neural architecture: its definition, key components, design principles, applications, and challenges, and its pivotal role in shaping the future of intelligent machines.

Defining Neural Architecture

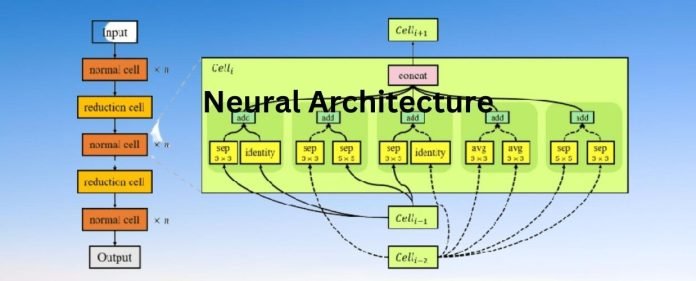

Neural architecture refers to the structure of an artificial neural network (ANN). It covers how many layers the network has, how many neurons each layer contains, how those neurons are connected, and which operations, such as activation functions, transform data as it flows through the network. This structure determines what a network can learn and how efficiently it can be trained and run. For that reason, it acts as the blueprint for the network’s capabilities, efficiency, and performance.

Critical Components of Neural Architecture

Understanding neural architecture starts with a few key components. The most basic is the artificial neuron, the computational building block of every neural network.

Layers

Layers are the fundamental units that structure how a neural network processes data. They fall into three categories, each with a distinct role:

- The input layer receives the raw data, for example the pixel values of an image.
- Hidden layers transform that data into increasingly abstract features.
- The output layer produces the network’s final predictions or classifications.
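The following minimal sketch makes the three layer types concrete. It runs a forward pass through a tiny fully connected network in plain Python. Several details are illustrative assumptions rather than values from any trained model:

- the layer sizes (2 inputs, 3 hidden neurons, 1 output);
- the ReLU activation;
- the hand-picked weights.

The helper names `dense`, `relu`, and `forward` are also hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per neuron in the layer


def dense(x: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each neuron takes a weighted sum of all inputs plus its bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU activation: negative values become zero."""
    return [max(0.0, z) for z in v]


def forward(x: Vector) -> Vector:
    # Input layer: the raw feature vector x (2 features) is passed in unchanged.
    # Hidden layer: 3 neurons with ReLU activation (illustrative weights).
    hidden = relu(dense(x, [[0.5, -1.0], [1.0, 1.0], [-0.5, 2.0]], [0.0, 0.5, -1.0]))
    # Output layer: 1 neuron producing the network's final score.
    return dense(hidden, [[1.0, -0.5, 0.25]], [0.1])


print(forward([1.0, 2.0]))
```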

Neurons

Artificial neurons, or nodes, are loosely inspired by biological neurons and form the core computational units of a neural network. Each neuron receives inputs through weighted connections. It computes a weighted sum of those inputs plus a bias and passes the result through an activation function. The resulting output is then propagated to the neurons in the next layer.
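The computation a single neuron performs can be sketched in a few lines. This minimal example assumes a sigmoid activation and arbitrary illustrative weights. Real networks use many different activation functions and learn their weights during training.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic sigmoid, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    # 1. Weighted sum of the inputs plus the bias (the pre-activation).
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # 2. A non-linear activation (sigmoid, as an example) gives the output
    #    that is passed on to the next layer.
    return sigmoid(z)


print(neuron([0.5, -1.0, 2.0], [0.4, 0.3, -0.1], bias=0.1))
```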

Weights and Biases

The behavior of a neural network hinges on adjustable parameters known as weights and biases. Weights set the strength of the connections between neurons, controlling how much each input influences a neuron’s output. Biases add a constant offset to a neuron’s weighted sum, shifting the point at which it activates. Training is an iterative process, usually based on gradient descent, that adjusts these parameters to minimize prediction errors and improve network performance.
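The sketch below shows, in minimal form, how weights and biases are optimized. It fits a single linear neuron, y ≈ w·x + b, using plain gradient descent on mean squared error. The toy data, learning rate, and epoch count are assumptions chosen so that the answer (w = 3, b = -1) is known in advance. Practical training applies the same idea at much larger scale, typically with backpropagation and more sophisticated optimizers.

```python
from typing import List, Tuple


def train_linear_neuron(data: List[Tuple[float, float]],
                        lr: float = 0.05,
                        epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # arbitrary starting parameters
    n = len(data)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Move each parameter a small step against its gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Noise-free toy data generated from y = 3x - 1, so the ideal parameters are known.
data = [(x, 3 * x - 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b = train_linear_neuron(data)
print(round(w, 3), round(b, 3))
```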

Connectivity

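Connectivity patterns determine how neurons are linked to one another. Three patterns are common:

- In fully connected layers, every neuron receives input from every neuron in the previous layer.
- In convolutional layers, each neuron sees only a small local region of its input, and the same weights are reused across positions.
- Recurrent connections feed a layer’s output back into the network at the next time step.

Each pattern suits particular data types and learning objectives. Convolutional layers fit images, recurrent connections fit sequences, and fully connected layers handle general-purpose feature combination. The right choice helps the network extract meaningful features.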
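A recurrent connection differs from fully connected wiring because the layer’s previous output is fed back in alongside each new input. The sketch below shows this with a single scalar recurrent unit. The tanh activation, the weights, and the function name `simple_rnn` are illustrative assumptions. Practical RNNs use vectors and weight matrices rather than scalars.

```python
import math
from typing import List


def simple_rnn(sequence: List[float], w_x: float, w_h: float, b: float) -> List[float]:
    """Scalar recurrent unit: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        # The recurrent connection: the previous state h feeds back into this step.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Identical inputs yield different states over time because of the feedback loop.
print(simple_rnn([1.0, 1.0, 1.0], w_x=0.5, w_h=0.8, b=0.0))
```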

Design Principles of Neural Architecture

Understanding neural architecture also means understanding its design principles. These principles guide the development of neural networks and dictate how they are structured and organized.

Depth

The depth of a neural network, meaning its number of layers, directly affects its capacity to capture intricate patterns in data. Deeper networks can learn more complex features. However, they are also more prone to vanishing gradients during training and to overfitting. Choosing a suitable depth and pairing it with regularization techniques is therefore essential.
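A short calculation shows the vanishing-gradient problem. During backpropagation, the gradient that reaches early layers is a product of each layer’s local derivative. The sigmoid’s derivative is at most 0.25, so this product tends to shrink geometrically with depth. The sketch below assumes a chain of scalar sigmoid layers, each with weight 1. It is a simplified illustration, not a model of any particular network.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gradient_through_sigmoid_chain(depth: int, weight: float = 1.0, x: float = 0.0) -> float:
    """d(output)/d(input) through `depth` stacked scalar layers h = sigmoid(weight * h)."""
    h, grad = x, 1.0
    for _ in range(depth):
        z = weight * h
        h = sigmoid(z)
        # Chain rule: multiply by this layer's local derivative, weight * sigmoid'(z),
        # where sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z)) <= 0.25.
        grad *= weight * h * (1.0 - h)
    return grad


for depth in (1, 5, 10, 20):
    print(depth, gradient_through_sigmoid_chain(depth))
```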

Width

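The width of a network, meaning the number of neurons in a layer, determines how many features that layer can represent at once. Wider layers add capacity, but they also add parameters and computation. A layer that is too narrow may underfit, while an excessively wide one raises cost and the risk of overfitting. Width therefore has to be chosen with both learning speed and generalization in mind.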

Skip Connections

Skip connections address the vanishing-gradient problem in deep networks. They bypass one or more layers, typically by adding a block’s input directly to its output, so that information and gradients can flow through the network more easily. This makes very deep architectures easier to train and helps them converge.
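Below is a minimal sketch of a residual-style skip connection, together with a back-of-the-envelope gradient comparison. The `shrink` function stands in for the bypassed layers and is purely hypothetical. The key point is the derivative. For y = x + f(x), the local derivative is 1 + f'(x) rather than f'(x). As a result, the product across many layers does not collapse toward zero when f'(x) is small.

```python
from typing import Callable, List

Vector = List[float]


def residual_block(x: Vector, f: Callable[[Vector], Vector]) -> Vector:
    """Skip connection: add the block's input x to the output f(x) of the bypassed layers."""
    return [xi + fi for xi, fi in zip(x, f(x))]


def shrink(v: Vector) -> Vector:
    # Hypothetical stand-in for the bypassed layers: a small linear transformation.
    return [0.1 * vi for vi in v]


def chain_gradient(depth: int, local_derivative: float, residual: bool) -> float:
    """Gradient through `depth` scalar layers that share the same local derivative f'(x).

    Plain layer     y = f(x):     dy/dx = f'(x)
    Residual layer  y = x + f(x): dy/dx = 1 + f'(x)
    """
    factor = 1.0 + local_derivative if residual else local_derivative
    return factor ** depth


print(residual_block([1.0, -2.0], shrink))
print(chain_gradient(10, 0.1, residual=False))  # shrinks toward zero
print(chain_gradient(10, 0.1, residual=True))   # does not vanish
```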

Activation Functions

Non-linear activation functions, such as ReLU, sigmoid, and tanh, are a cornerstone of neural architecture. Without them, a stack of linear layers collapses into a single linear transformation, no matter how many layers it has. The non-linearity they introduce is what allows neural networks to capture intricate relationships in data and represent complex mappings between inputs and outputs.
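The claim that linear layers collapse can be checked directly. This sketch uses arbitrary illustrative weights. Two stacked linear functions always equal one combined linear function. Inserting a ReLU between them changes the output whenever the intermediate value is negative, so the resulting function is no longer linear.

```python
def layer1(x: float) -> float:
    return 2.0 * x + 1.0  # linear: weight 2, bias 1


def layer2(x: float) -> float:
    return 3.0 * x - 2.0  # linear: weight 3, bias -2


def relu(z: float) -> float:
    return max(0.0, z)


def collapsed(x: float) -> float:
    # layer2(layer1(x)) = 3 * (2x + 1) - 2 = 6x + 1: still one linear function.
    return 6.0 * x + 1.0


for x in (-1.0, 0.0, 2.0):
    stacked_linear = layer2(layer1(x))
    with_relu = layer2(relu(layer1(x)))
    print(x, stacked_linear, collapsed(x), with_relu)
```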

Applications of Neural Architecture

Understanding neural architecture is crucial for developing applications that rely on machine learning, such as image recognition, natural language processing, and autonomous vehicles.

Image Classification

Neural architecture has driven a revolution in image classification. Convolutional neural networks (CNNs), a type of ANN, have become a leading approach for tasks such as image recognition and object detection. CNNs can detect local features and assemble them into hierarchies, from edges to textures to whole objects. This ability has enabled remarkable advances in computer vision.
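The core CNN operation is easy to write out. This sketch implements a "valid" 2D convolution (no padding, stride 1) as cross-correlation, which is how most deep learning libraries compute it. It applies a hand-made vertical-edge kernel to a tiny image. In a real CNN, the kernel weights are learned rather than chosen by hand, and many kernels run in parallel.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D convolution (no padding, stride 1), computed as cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output depends only on a small local patch of the image,
            # and the same kernel weights are reused at every position.
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


# A 4x4 image: dark left half, bright right half.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
# A hand-made (hypothetical) vertical-edge detector.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]
print(conv2d(image, kernel))
```

The response is nonzero only in the column where dark pixels meet bright ones, which is the kind of local feature a CNN’s early layers pick up.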

Natural Language Processing (NLP)

Neural architecture also lies at the heart of groundbreaking developments in natural language processing. Recurrent neural networks (RNNs) and transformer architectures have proven instrumental in tasks ranging from language translation and sentiment analysis to text generation. Their ability to capture dependencies between words across a sequence has propelled NLP to unprecedented heights.
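Transformers are built around attention, which lets every position in a sequence draw information directly from every other position. The sketch below implements scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, in plain Python. It leaves out the learned projection matrices, multiple heads, and masking that real transformer layers use. For brevity, it reuses the same toy vectors as queries, keys, and values.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Score this query against every key (every position in the sequence).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


# Three positions with 2-dimensional toy (hypothetical) vectors.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(X, X, X))
```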

Autonomous Systems

Neural architecture is the bedrock of autonomous systems, from self-driving cars to intelligent robots. Neural networks enable these systems to perceive and navigate their environments, supporting real-time decision-making and path planning. By fusing neural networks with sensor data, autonomous systems can interact with and adapt to their surroundings.

Drug Discovery

In drug discovery, neural networks have proven to be invaluable tools. They predict molecular properties and identify potential drug candidates, which accelerates the time-consuming search for viable compounds. They offer an efficient way to sift through vast datasets and pinpoint the compounds with the highest likelihood of success.

Challenges of Neural Architecture

One of the challenges of neural architecture is designing efficient and accurate networks. Balancing these two factors can be tricky, as increasing accuracy often requires more complex models that may slow down the training and inference process.

Hyperparameter Tuning

Designing optimal neural architectures entails meticulous calibration of hyperparameters such as learning rates, dropout rates, and batch sizes. Achieving the right balance requires extensive experimentation, domain expertise, and a deep understanding of the nuances of the specific task at hand.
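One common, simple tuning strategy is random search: sample configurations from a predefined space and keep the best one. It appears below only as an example; the article doesn’t prescribe a particular method. The search-space values and `toy_objective` are hypothetical stand-ins. In practice, each evaluation means training a model and measuring its validation loss.

```python
import math
import random
from typing import Any, Callable, Dict, List, Optional, Tuple

Config = Dict[str, Any]


def random_search(objective: Callable[[Config], float],
                  space: Dict[str, List[Any]],
                  trials: int,
                  seed: int = 0) -> Tuple[Optional[Config], float]:
    """Evaluate `trials` randomly sampled configurations; return the lowest-scoring one."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    best_config: Optional[Config] = None
    best_score = float("inf")
    for _ in range(trials):
        # Draw one value independently for each hyperparameter.
        config = {name: rng.choice(values) for name, values in space.items()}
        score = objective(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Hypothetical search space for the hyperparameters named above.
space = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3, 1e-2],
    "dropout": [0.0, 0.1, 0.3, 0.5],
    "batch_size": [16, 32, 64, 128],
}


def toy_objective(cfg: Config) -> float:
    # Hypothetical stand-in for "train the model, return validation loss":
    # it simply scores distance from lr=1e-3, dropout=0.1, batch_size=64.
    return (abs(math.log10(cfg["learning_rate"]) + 3)
            + abs(cfg["dropout"] - 0.1)
            + abs(math.log2(cfg["batch_size"]) - 6) / 10)


best, score = random_search(toy_objective, space, trials=50)
print(best, score)
```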

Computational Resources

The complexity of neural architectures demands substantial computational resources, both processing power and memory. As architectures grow deeper and wider, the need for robust hardware infrastructure becomes increasingly pronounced.
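Counting parameters is a quick way to see how resource needs grow. A fully connected layer with n_in inputs and n_out neurons has n_in × n_out weights plus n_out biases. The sketch below makes three assumptions:

- all layers are fully connected;
- parameters are stored as 32-bit floats (4 bytes each);
- the layer sizes are illustrative, with 784 corresponding to a flattened 28 × 28 image.

It counts stored parameters only. Training also needs memory for activations, gradients, and optimizer state.

```python
from typing import List


def mlp_parameter_count(layer_sizes: List[int]) -> int:
    """Weights plus biases of a fully connected network, e.g. [784, 256, 10]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def float32_megabytes(n_params: int) -> float:
    # 4 bytes per 32-bit float; parameters only (no activations, gradients,
    # or optimizer state, which add more memory during training).
    return n_params * 4 / 1e6


for sizes in ([784, 256, 10], [784, 1024, 10], [784, 1024, 1024, 1024, 10]):
    n = mlp_parameter_count(sizes)
    print(sizes, n, f"{float32_megabytes(n):.1f} MB")
```

Widening the hidden layer from 256 to 1024 neurons roughly quadruples the parameter count. Adding two more 1024-neuron layers then more than triples it again.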

Overfitting and Underfitting

Striking the right balance between model complexity and the available data is a recurring challenge in neural architecture. Overfitting occurs when the model captures noise in the training data, leading to poor generalization. Conversely, underfitting arises when the model is too simple to capture the underlying patterns, resulting in suboptimal performance.

Future of Neural Architecture

The trajectory of neural architecture points toward continuous innovation and refinement. As technology advances, more specialized and efficient architectures will emerge, tailored to specific domains and tasks. Automation will play an increasingly pivotal role, with methods like neural architecture search (NAS) seeking to discover optimal architectures without exhaustive manual intervention. These advances will democratize neural architecture design, enabling a wider range of practitioners to harness the power of artificial intelligence.

Conclusion

In the panorama of intelligent machines, neural architecture is the bedrock on which these systems are built. Its orchestration of neurons, layers, and connections underpins neural networks’ capacity to learn and generalize. From deciphering images to understanding language and navigating complex environments, it enables AI’s transformative capabilities. Formidable challenges persist, but the field remains rich with potential for pioneering breakthroughs and innovation.

As we navigate an era defined by intelligent machines, neural architecture continues to evolve. It is clearing the path for systems that simulate human cognition and transcend the boundaries of what artificial intelligence can accomplish. Marked by creativity and advancement, the field is destined to leave an indelible mark on the history of technological progress.