IBM’s latest AI chip prototype mimics the human brain and is much more energy-efficient than current chips. The technology could change the way AI is deployed, since it could significantly reduce the power consumption of data centers.

Overview of Achievement

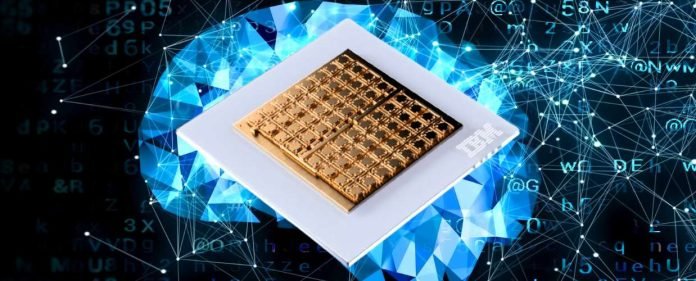

IBM has announced a prototype AI chip that mimics the human brain. Its efficiency is attributed to its components, which operate similarly to connections in the human brain. Because the chip draws far less power than current chips, it could cut the energy used by data centers and allow more complex AI tasks to run in low-power environments such as cars, mobile phones, and cameras.

Significant Breakthrough

According to Thanos Vasilopoulos, a scientist at IBM’s Zurich research lab, the human brain achieves a high level of performance while consuming minimal power. The new chip can replicate this efficiency, allowing for a new generation of power-efficient AI chips that will benefit a wide range of devices, including smartphones.

This efficiency could be particularly important given concerns about emissions from data centers: cloud service providers could use these chips to reduce both their energy costs and their carbon footprint. More broadly, the prototype could change how we think about AI and its energy consumption. Its efficiency and its ability to handle complex tasks could pave the way for more sustainable AI-powered devices.

Different From Other Digital Chips

IBM’s prototype operates differently from conventional digital chips. Instead of storing data as binary 0s and 1s, it uses analog components called memristors, each of which can store a range of values. This mimics how synapses work in the human brain and is what makes the chip more efficient. As Professor Ferrante Neri from the University of Surrey notes, this approach falls within the realm of nature-inspired computing, where technology emulates the human brain’s functionality.
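
To make the difference between a binary cell and a multi-value cell concrete, here is a minimal Python sketch. It is an illustration only, not IBM's design: the function names `quantize` and `bits_per_cell`, the sample weights, and the choice of 16 levels are all assumptions made for the example, and a real memristor's behavior is far less tidy than evenly spaced levels.

```python
import math


def quantize(weight, levels):
    """Snap a weight in [0.0, 1.0] to the nearest of `levels` evenly spaced states.

    levels=2 models a binary cell (only 0 or 1); a larger value models a
    hypothetical multi-level analog cell.
    """
    if levels < 2:
        raise ValueError("a cell needs at least two states")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0.0, 1.0]")
    top = levels - 1
    return round(weight * top) / top


def bits_per_cell(levels):
    """Information one cell can hold, in bits, given its number of distinct states."""
    return math.log2(levels)


# Hypothetical values a neural network might need to store.
weights = [0.10, 0.35, 0.62, 0.90]

binary_cells = [quantize(w, 2) for w in weights]    # each cell holds 0.0 or 1.0
analog_cells = [quantize(w, 16) for w in weights]   # assumed 16-level cell

for w, b, a in zip(weights, binary_cells, analog_cells):
    print(f"target={w:.2f}  binary={b:.2f}  16-level={a:.3f}")

print("bits per binary cell:", bits_per_cell(2))
print("bits per 16-level cell:", bits_per_cell(16))
```

In this toy model a single binary cell can only round each value to 0 or 1, while a single multi-level cell lands much closer to the target. A digital chip would need several binary cells to match that precision, which is one intuition for why storing multiple values per component can save hardware and energy.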